The purpose of this article is to show the results of testing the integration of a Big Data platform with other geospatial tools. It is worth highlighting that the integrated components, all of them open source, allow us to publish web services compliant with OGC standards (WMS, WFS, WPS).

This article describes the installation steps, settings and development work needed to get a web mapping client application showing NO2 measurements from around 4,000 European stations over four months (observations were recorded hourly), around 5 million records. Yes, I know, this doesn't look like a "Big Data" store, but it seems big enough to check performance when applications read it using spatial and/or temporal filters.

The article doesn't aim to teach the software in depth; all of the components already publish good documentation for both users and developers. It simply wants to share experiences and offer a simple guide that collects resources about the software components used. For example, the comments about GeoWave and its integration with GeoServer are copied from the product guide on its website.

Data schema

Test data was downloaded from the European Environment Agency (EEA). You can search here for information or map viewers on this or other topics, or better, you could use your own data.

GDELT is another interesting project that offers massive data.

The schema of the test data is simple: the input is a group of CSV files (text files with attributes separated by commas) containing points in geographic coordinates (latitude/longitude) that georeference the sensor, the measurement date, and the NO2 concentration in air. There are other secondary attributes, but they aren't important for our test.
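As a rough illustration of this schema, the following Python sketch reads such a file into simple records. The column names (`station`, `latitude`, `longitude`, `datetime_begin`, `no2`), the timestamp format and the sample values are assumptions made for the example; the real EEA files may name and format them differently.

```python
import csv
import io
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Measure:
    station: str
    lat: float
    lon: float
    when: datetime
    no2: float


def read_measures(stream):
    """Yield one Measure per CSV row; the column names are hypothetical."""
    for row in csv.DictReader(stream):
        yield Measure(
            station=row["station"],
            lat=float(row["latitude"]),
            lon=float(row["longitude"]),
            when=datetime.strptime(row["datetime_begin"], "%Y-%m-%d %H:%M:%S"),
            no2=float(row["no2"]),
        )


# Tiny in-memory sample standing in for a real file (made-up values).
SAMPLE = io.StringIO(
    "station,latitude,longitude,datetime_begin,no2\n"
    "ST001,40.4168,-3.7038,2017-06-01 12:00:00,41.5\n"
    "ST002,48.8566,2.3522,2017-06-01 12:00:00,37.0\n"
)
measures = list(read_measures(SAMPLE))
print(len(measures), measures[0].when)
```

Parsing the date into a real `datetime` here matters for the same reason it matters later in the article: a temporal index needs a typed date, not a string.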

Software architecture

The test consists of chaining a set of tools, each of which offers data and functionality to the next software component in the application architecture. The application workflow starts with Hadoop and its HDFS, then HBase to map it as a database, the great GeoWave working as a connector between it and the popular GeoServer, which implements several OGC standards, and finally a web client application fetching data to show maps as usual (for example, using Leaflet and the Heatmap.js library).

Apache Hadoop

Apache Hadoop is, when we search a bit on Google, ...

a framework that allows for the distributed processing of large data sets across clusters of computers using simple programming models. It is designed to scale up from single servers to thousands of machines, each offering local computation and storage. Rather than rely on hardware to deliver high-availability, the library itself is designed to detect and handle failures at the application layer, so delivering a highly-available service on top of a cluster of computers, each of which may be prone to failures.

HDFS is a distributed file system that provides high-performance access to data across Hadoop clusters. Because HDFS typically is deployed on low-cost commodity hardware, server failures are common. The file system is designed to be highly fault-tolerant, however, by facilitating the rapid transfer of data between compute nodes and enabling Hadoop systems to continue running if a node fails. That decreases the risk of catastrophic failure, even in the event that numerous nodes fail.

Our test will use Hadoop and its HDFS as the repository for the data that we are going to store and finally publish to the end-user application. You can read the project resources here, or search the Internet to learn about it in depth.

I have used Windows for my tests. The official Apache Hadoop releases do not include Windows binaries, but you can easily build them with this great guide (it uses Maven) and configure the necessary files to run at least a single-node cluster. Of course, a production environment will require us to configure a distributed multi-node cluster, use a ready-to-use distribution (Hortonworks...) or move to the cloud (Amazon S3, Azure...).

Let's go ahead with this guide. Once Hadoop has been built with Maven, the configuration files have been edited and the environment variables have been defined, we can test that everything is OK by running this in the console...

> hadoop version

Then we start the NameNode and DataNode daemons, and the YARN resource manager.

> call ".\hadoop-2.8.1\etc\hadoop\hadoop-env.cmd"

> call ".\hadoop-2.8.1\sbin\start-dfs.cmd"

> call ".\hadoop-2.8.1\sbin\start-yarn.cmd"

We can see the Hadoop admin application on the configured HTTP port, 50070 in my case.

Apache HBase

Apache HBase is, searching on Google again, ...

a NoSQL database that runs on top of Hadoop as a distributed and scalable big data store. This means that HBase can leverage the distributed processing paradigm of the Hadoop Distributed File System (HDFS) and benefit from Hadoop’s MapReduce programming model. It is meant to host large tables with billions of rows with potentially millions of columns and run across a cluster of commodity hardware.

You can read here how to get started and install HBase. Again, we check the product version by running...

> hbase version

Start HBase:

> call ".\hbase-1.3.1\conf\hbase-env.cmd"

> call ".\hbase-1.3.1\bin\start-hbase.cmd"

See the HBase admin application, on port 16010 in my case.
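A quick way to confirm that both admin applications are listening is to try a TCP connection to each port. The following Python sketch does only that; the ports are the ones from my setup, and the host name and labels are assumptions to adjust to your own configuration.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


# Ports taken from this article's setup; adjust them to your configuration.
ADMIN_PORTS = {"Hadoop NameNode UI": 50070, "HBase Master UI": 16010}

if __name__ == "__main__":
    for name, port in ADMIN_PORTS.items():
        state = "up" if port_is_open("localhost", port) else "down"
        print(f"{name} (port {port}): {state}")
```

This only tells us that something accepts connections on the port, not that the service is healthy, so it complements the admin pages rather than replacing them.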

OK, at this point we have the big data environment working. It is time to prepare the tools that add geospatial capabilities: GeoWave and GeoServer. Let's go ahead...

LocationTech GeoWave

GeoWave is a software library that connects the scalability of distributed computing frameworks and key-value stores (Hadoop + HBase in my case) with modern geospatial software to store, retrieve and analyze massive geospatial datasets.

Wow! this is a great tool :-)

Speaking from a developer's point of view, this library implements a vector data provider for the GeoTools toolkit in order to read features (geometry and attributes) from a distributed environment. When we add the corresponding plugin to GeoServer, the user will see new data stores for configuring the newly supported distributed dataset types.

Let's leave GeoServer for later. According to the GeoWave user and developer guides, we have to define the primary and secondary indexes that our layers will use; then we can load information into our big data storage.

Following the developer guide, we build the GeoWave toolkit with Maven in order to save geographic data in HBase:

> mvn package -P geowave-tools-singlejar

and the plugin to include in GeoServer:

> mvn package -P geotools-container-singlejar

I have defined my own environment variable with a base command in order to run GeoWave processes as conveniently as possible:

> set GEOWAVE=java -cp "%GEOWAVE_HOME%/geowave-deploy-0.9.6-SNAPSHOT-tools.jar" mil.nga.giat.geowave.core.cli.GeoWaveMain

Now we can easily run commands by typing %geowave% [...]. We check the GeoWave version:

> %geowave% --version

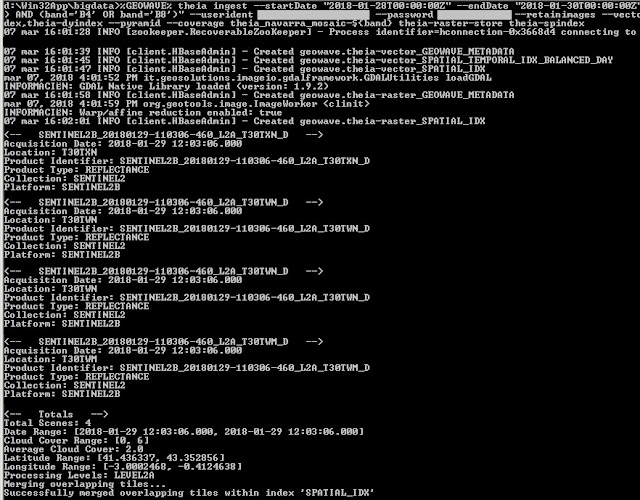

OK, we are going to register the spatial and temporal indexes that our layer needs. The client application will filter data using a spatial filter (a BBOX filter) and a temporal filter to fetch only the NO2 measurements of a specific date.
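To give an intuition for why a spatial index helps with a BBOX filter in a key-value store, the sketch below maps a longitude/latitude pair to a single sortable integer with a Z-order (Morton) curve, so that nearby points tend to get nearby keys. This is only a generic illustration with hypothetical function names, not GeoWave's actual indexing code; see the GeoWave guides for the curves and strategies it really uses.

```python
def interleave_bits(x: int, y: int, bits: int = 16) -> int:
    """Interleave the low `bits` bits of x (even positions) and y (odd positions)."""
    key = 0
    for i in range(bits):
        key |= ((x >> i) & 1) << (2 * i)
        key |= ((y >> i) & 1) << (2 * i + 1)
    return key


def z_order_key(lon: float, lat: float, bits: int = 16) -> int:
    """Quantize lon/lat to a 2^bits grid and combine both cells into one key."""
    cells = (1 << bits) - 1
    x = int((lon + 180.0) / 360.0 * cells)
    y = int((lat + 90.0) / 180.0 * cells)
    return interleave_bits(x, y, bits)


# Illustrative coordinates: two points close together and one far away.
near_a = z_order_key(-3.70, 40.42)
near_b = z_order_key(-3.69, 40.43)
far = z_order_key(24.94, 60.17)
print(near_a, near_b, far)
```

With keys like these, a bounding box can be translated into a small number of key ranges to scan, instead of reading every row.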

Let's go ahead and register both indexes:

> %geowave% config addindex -t spatial eea-spindex --partitionStrategy ROUND_ROBIN

> %geowave% config addindex -t spatial_temporal eea-hrindex --partitionStrategy ROUND_ROBIN --period HOUR

And add a "store", in GeoWave terminology, for our new layer:

> %geowave% config addstore eea-store --gwNamespace geowave.eea -t hbase --zookeeper localhost:2222

Note that, in the last command, 2222 is the port number on which my ZooKeeper instance is published.

Now we can load data. Our input consists of CSV files, so I will use the "-f geotools-vector" option to indicate that GeoTools should work out which vector provider to use to read the data. There are other supported formats and, of course, we can develop a new provider to read our own specific data types.

To load a CSV file:

> %geowave% ingest localtogw -f geotools-vector ./mydatapath/eea/NO2-measures.csv eea-store eea-spindex,eea-hrindex

OK, data loaded, so far no problems, right? But the GeoTools CSVDataStore has some limitations when reading files. The current code doesn't support date/time attributes (nor boolean attributes); it treats all of them as strings. This is unacceptable for our requirements, because the measurement date has to be a properly typed attribute for the index, so I fixed it in the original Java code. Also, in order to work out the appropriate value type of each attribute, the reader reads all rows in the file. This is the safest approach, but it can be very slow when reading big files with thousands and thousands of rows. If the file has a consistent schema, we can read a small set of rows to work out the types, so I changed that too. We have to rebuild GeoTools and GeoWave. You can download the changes from my own GeoTools fork.
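The idea behind both changes can be sketched in a few lines of Python. This is not the Java code from the fork, only an illustration of inferring column types (including dates) from a small sample of rows; the function names and the accepted date format are assumptions made for the example.

```python
import csv
import io
from datetime import datetime
from itertools import islice


def guess_type(values):
    """Return the narrowest type (int, float, datetime, str) that fits all values."""
    def all_parse(parser):
        try:
            for value in values:
                parser(value)
            return True
        except (ValueError, TypeError):
            return False

    if all_parse(int):
        return int
    if all_parse(float):
        return float
    if all_parse(lambda v: datetime.strptime(v, "%Y-%m-%d %H:%M:%S")):
        return datetime
    return str


def infer_schema(stream, sample_rows=100):
    """Infer column types from only the first `sample_rows` data rows."""
    reader = csv.DictReader(stream)
    sample = list(islice(reader, sample_rows))
    return {name: guess_type([row[name] for row in sample])
            for name in reader.fieldnames}


# Made-up rows; only the first one is inspected here.
data = ("id,value,datetime_begin,name\n"
        "1,41.5,2017-06-01 12:00:00,a\n"
        "2,37,2017-06-01 13:00:00,b\n")
print(infer_schema(io.StringIO(data), sample_rows=1))
```

The trade-off is the one described above: sampling is much faster on big files, but it is only safe when later rows follow the same schema as the sampled ones.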

After this break, let me return to the main path of the guide. We have loaded features into our layer with the "ingest" command. We have also included the plugin in a deployed GeoServer instance (see the developer guide, it is easy: just copy the "geowave-deploy-xxx-geoserver.jar" component to the "..\WEB-INF\lib" folder and restart).

GeoServer

GeoServer is

an open source server for sharing geospatial data. Designed for interoperability, it publishes data from any major spatial data source using open standards. GeoServer is an Open Geospatial Consortium (OGC) compliant implementation of a number of open standards such as Web Feature Service (WFS), Web Map Service (WMS), and Web Coverage Service (WCS).

Additional formats and publication options are available including Web Map Tile Service (WMTS) and extensions for Catalogue Service (CSW) and Web Processing Service (WPS).

We use GeoServer to read the layers loaded with GeoWave; the plugin we just added to our GeoServer allows us to connect to this data. We can use it like any other type of layer. Wow! :-)

To configure the access to distributed data stores, we can use two options:

- Using the GeoServer admin panel as usual:

- Using the "gs" command of GeoWave to register Data Stores and Layers in a started GeoServer instance.

Since we are testing things, we are going to use the second option. The first step is to tell GeoWave which GeoServer instance we want to configure.

> %geowave% config geoserver -ws geowave -u admin -p geoserver http://localhost:8080/geoserver

Similar to what we would do in the GeoServer admin panel, we run two commands to add the desired data store and layer, respectively.

> %geowave% gs addds -ds geowave_eea -ws geowave eea-store

> %geowave% gs addfl -ds geowave_eea -ws geowave NO2-measures

As you can see, the spatial reference system of the layer is EPSG:4326; the remaining settings are similar to those of other layer types. If we preview the map with the OpenLayers client of GeoServer...

The performance is quite decent (you can see the video linked to the image), taking into account that I am running on a not very powerful PC, with Hadoop working in single-node mode, and drawing all the NO2 measurements for all available dates (~5 million records). The spatial index works well: the closer we zoom in, the faster the response. Also, if we execute a WFS request with a temporal filter, we can check that the temporal index works too, since GeoServer doesn't scan all the records of the layer.
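As a sketch of such a request, the following Python code builds a WFS GetFeature URL that combines a bounding box and a date in a single `cql_filter` (GeoServer treats its `bbox` and `cql_filter` parameters as mutually exclusive, so the box goes inside the CQL expression). The geometry attribute name `geom`, the bounding box values and the exact date literal syntax are assumptions; check them against your layer and the GeoServer ECQL reference.

```python
from urllib.parse import parse_qs, urlencode, urlsplit


def wfs_getfeature_url(base_url, type_name, bbox, when,
                       geom_attr="geom", time_attr="datetime_begin"):
    """Build a GetFeature URL filtered by bounding box and by an exact date."""
    minx, miny, maxx, maxy = bbox
    # geom_attr and the quoting of the date literal are hypothetical.
    cql = (f"BBOX({geom_attr},{minx},{miny},{maxx},{maxy}) "
           f"AND {time_attr} = '{when}'")
    params = {
        "service": "WFS",
        "version": "1.1.0",
        "request": "GetFeature",
        "typeName": type_name,
        "outputFormat": "application/json",
        "cql_filter": cql,
    }
    return f"{base_url}/wfs?{urlencode(params)}"


url = wfs_getfeature_url(
    "http://localhost:8080/geoserver",
    "geowave:NO2-measures",
    (-10, 35, 5, 45),
    "2017-06-01T12:00:00Z",
)
print(url)
```

Timing this request for different dates and extents is a simple way to see whether the indexes are being used.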

The GeoWave user guide describes a special style named "subsamplepoints" (it uses a WPS process named "geowave:Subsample", which the GeoWave plugin implements). When drawing a map, this style performs spatial subsampling to speed up the rendering process. I have verified a great performance gain, and I recommend it for drawing point layers.

I also tested loading a polygon layer from a Shapefile without problems; WMS GetMap and WFS GetFeature requests run fine. Just one note: the GeoWave loading tool automatically transforms geometries from the original spatial reference system (EPSG:25830 in my case) to EPSG:4326 in geographic coordinates.

At this point we have verified that everything fits together. We could stop here, since this data could already be exploited with standard web mapping libraries (Leaflet, OpenLayers, AGOL, ...) or any desktop GIS application (QGIS, gvSIG, ...).

Would you like to continue?

Web Mapping client with Leaflet

I continued by developing a web mapping client application with Leaflet. This viewer can draw the map in two styles: as a heatmap or with a thematic color ramp. It renders all the observations or measurements of a single date, and can even animate across all available dates.

We can also verify performance with this viewer, since it combines a spatial and a temporal filter in a single query. Let's go ahead.

The easiest option, and perhaps the optimal one, would have been for the client application to perform WMS GetMap requests, but I am going to send requests to GeoServer to fetch the geometries and draw them in the client as we wish. We could use WFS GetFeature requests with the current map bounds (which generates a spatial BBOX filter) and a PropertyIsEqualTo filter for a specific date. But we shouldn't forget that we are managing Big Data stores that can produce huge GML or JSON responses with thousands and thousands of records.

In order to avoid this problem I developed a pair of WPS processes, called "geowave:PackageFeatureLayer" and "geowave:PackageFeatureCollection", that return the response as a compressed binary stream. You could use another packaging logic, for example returning a special image whose pixels encode geometries and feature attributes. The whole point is to minimize the size of the information and speed up its processing in the client application.
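To illustrate the general idea of a compressed binary stream, here is a minimal Python sketch that packs points into fixed-size binary records and compresses them with zlib. The record layout (two float32 coordinates plus a uint16 value, preceded by a record count) is a hypothetical format invented for this example, not the one used by the WPS processes above.

```python
import struct
import zlib

# Hypothetical record layout: lon and lat as float32, value as uint16.
RECORD = struct.Struct("<ffH")


def pack_points(points):
    """Pack (lon, lat, value) tuples into a zlib-compressed byte string."""
    raw = struct.pack("<I", len(points)) + b"".join(
        RECORD.pack(lon, lat, value) for lon, lat, value in points
    )
    return zlib.compress(raw)


def unpack_points(blob):
    """Reverse pack_points: decompress and decode every record."""
    raw = zlib.decompress(blob)
    (count,) = struct.unpack_from("<I", raw, 0)
    return [RECORD.unpack_from(raw, 4 + i * RECORD.size) for i in range(count)]


# Made-up points whose coordinates are exactly representable as float32.
points = [(-3.5, 40.25, 41), (2.25, 48.75, 37)]
blob = pack_points(points)
print(len(blob), unpack_points(blob))
```

Each point takes 10 bytes before compression, which is far less than the same point written as a GML or JSON feature, at the cost of a custom decoder in the client.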

The WPS parameters are: first, the layer name in the current GeoServer catalog (a "SimpleFeatureCollection" for the "geowave:PackageFeatureCollection" process), then the BBOX, and an optional CQL filter (in my case I am sending something similar to "datetime_begin = 2017-06-01 12:00:00").

I am not going to explain the code in detail, as it is beyond the scope of this guide. If you like, you can study it at the GitHub link at the end of the article.

The client application runs a WebWorker that sends a WPS request to our GeoServer instance. The request executes the "geowave:PackageFeatureLayer" process to minimize the response size. Then the WebWorker decompresses the binary stream, parses it to create JavaScript objects with points and attributes, and finally returns them to the main browser thread to be drawn. The client application renders these objects using the Heatmap.js library or by drawing on an HTML5 Canvas to create a thematic color ramp. For this second style, the application creates on-the-fly textures of the colored icons to use when drawing the points. This trick allows the map to render thousands and thousands of points pretty fast.
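The color ramp part of this trick can be sketched as follows. The Python code below (the real client is JavaScript) interpolates a color for a value and precomputes a small palette, which plays the same role as the pre-rendered icon textures: the expensive work is done once per class instead of once per point. The ramp colors, the value range and the function names are assumptions made for the example.

```python
def lerp(a, b, t):
    """Linear interpolation between a and b for t in [0, 1]."""
    return a + (b - a) * t


# Hypothetical ramp: blue -> yellow -> red, as (position, (r, g, b)) stops.
RAMP = [(0.0, (0, 0, 255)), (0.5, (255, 255, 0)), (1.0, (255, 0, 0))]


def ramp_color(value, vmin, vmax, ramp=RAMP):
    """Map value in [vmin, vmax] (vmin < vmax) to an RGB tuple, clamping outliers."""
    t = min(1.0, max(0.0, (value - vmin) / (vmax - vmin)))
    for (t0, c0), (t1, c1) in zip(ramp, ramp[1:]):
        if t <= t1:
            f = (t - t0) / (t1 - t0)
            return tuple(round(lerp(a, b, f)) for a, b in zip(c0, c1))
    return ramp[-1][1]


def build_palette(vmin, vmax, steps=16):
    """Precompute one color per class, analogous to pre-rendering icon textures."""
    return [ramp_color(lerp(vmin, vmax, i / (steps - 1)), vmin, vmax)
            for i in range(steps)]


palette = build_palette(0, 100)
print(palette[0], palette[-1])
```

When drawing, each point only needs to look up its class in the palette, which keeps the per-point cost very low.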

If our client application needs to draw millions of points, we can dive into WebGL and the great WebGL Heatmap library, or fantastic demos such as "How I built a wind map with WebGL".

The source code of the WPS module and the web mapping client application is here.

